As a software developer, I sometimes find myself in situations where I have to code in all layers/tiers of an application. In the web development context using Microsoft technology (where most of my experience lies), this means writing JavaScript, dealing with divs and tables in HTML, writing business logic in C#, and going all the way down to writing stored procedures. Working this way is not a bad thing, since I get the full picture of the process, from the moment the user inputs the data to the moment it is saved in the database. Perhaps surprisingly, this has happened in most of the companies I have worked for, regardless of project size.

The multi-responsibility role that a developer has to bear is quite common nowadays. Take a peek at online job posts and you will easily notice that most developer job openings look for an all-rounder who can do everything from A to Z and has experience working in all tiers.

I agree that in a small development project we don't have the luxury of proper design and planning. Work items are therefore often screens from a prototype, and a single developer is assigned to code each screen from the UI tier down to the database tier. However, as the project grows and more developers join, I suggest splitting developers into several distinct roles:

1. UI/Front-end developers. These developers are most experienced with the event-driven nature of UI programming. In web development projects, they are fluent in client-side scripting, prefer to hand-code HTML, fully understand the difference between a listbox and a drop-down list, etc.

2. Middle-tier developers. These developers deal with business object classes, web services, and data access layer classes.

3. Database developers. They live on a different side of the world from the other two types of developers. They speak only T-SQL. Their tools are Enterprise Manager and Query Analyzer instead of Visual Studio.
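To make the three roles concrete, here is a minimal sketch of one feature (registering a customer) split across the tiers, so that each role has its own clearly bounded piece of code. It assumes Python with an in-memory SQLite database standing in for SQL Server; in the setup described above the SQL would live in T-SQL stored procedures and the middle tier in C#. All names (`create_schema`, `CustomerRepository`, `CustomerService`, `handle_signup_form`, the `customer` table) are hypothetical and exist only for illustration.

```python
import sqlite3
from typing import Optional


# Database tier (hypothetical schema): owned by the database developers.
# SQLite stands in for SQL Server here.
def create_schema(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE customer ("
        "id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT NOT NULL UNIQUE)"
    )


# Middle tier, data access layer: the only code that talks to the database.
class CustomerRepository:
    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn

    def insert(self, name: str, email: str) -> int:
        cur = self._conn.execute(
            "INSERT INTO customer (name, email) VALUES (?, ?)", (name, email)
        )
        return cur.lastrowid

    def find_by_email(self, email: str) -> Optional[tuple]:
        return self._conn.execute(
            "SELECT id, name, email FROM customer WHERE email = ?", (email,)
        ).fetchone()


# Middle tier, business object: validation rules live here,
# not in the UI and not in the SQL.
class CustomerService:
    def __init__(self, repo: CustomerRepository) -> None:
        self._repo = repo

    def register(self, name: str, email: str) -> int:
        name = name.strip()
        email = email.strip().lower()
        if not name:
            raise ValueError("name is required")
        if "@" not in email:
            raise ValueError("email is invalid")
        if self._repo.find_by_email(email) is not None:
            raise ValueError("email is already registered")
        return self._repo.insert(name, email)


# UI tier: turns raw form input into a service call and a message for the user.
def handle_signup_form(service: CustomerService, form: dict) -> str:
    try:
        new_id = service.register(form.get("name", ""), form.get("email", ""))
    except ValueError as err:
        return f"Error: {err}"
    return f"Welcome! Your customer id is {new_id}."


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    service = CustomerService(CustomerRepository(conn))
    print(handle_signup_form(service, {"name": "Ann", "email": "Ann@Example.com"}))
    # → Welcome! Your customer id is 1.
    print(handle_signup_form(service, {"name": "Ann", "email": "ann@example.com"}))
    # → Error: email is already registered
```

Each function or class maps to one of the roles listed above, so each role can own and change its piece without touching the others, as long as the calls between tiers stay the same.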

Projects with a clear separation of roles enjoy the following benefits (drawn from my experience working on such projects):

1. The right man in the right place. It sounds like a cliché, but it is true. Most developers will say 'yes', they can work in every tier, but in fact they are more effective in one tier than in the others. A developer's past experience says a lot about this. Face the fact: a developer who writes good object-oriented JavaScript may not write business object classes in C# effectively, let alone a sophisticated stored procedure in T-SQL with proper error handling.

2. Promotes separation of responsibility across tiers, an important concept in object orientation. Although true separation of responsibility can only be achieved through proper design, having different developers on each tier helps ensure that no code sits in the wrong place, because each tier is written by different developers.

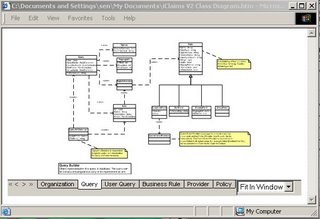

3. Each tier can be planned and developed independently. For example, once the database design is done, the database developers can start working on the stored procedures. Meanwhile, once the class diagram is done, the middle-tier guys can start creating classes. Usually the UI developers start later and finish later, as they have to work on the prototype and go through an iterative release-feedback process with the client.

4. Makes programming standards and conventions easier to enforce. For example, naming conventions for strongly typed C# differ from those for loosely typed JavaScript. T-SQL is most effective when it operates on whole result sets, while C# developers are more used to writing loops.

5. Promotes communication. At my previous company, developers in the same role sat next to each other in the same corner, which encouraged communication and code reuse among them. By contrast, in a 20-person team where everybody works on the stored procedures, not everybody knows what the others have done.
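The set-based versus loop contrast from point 4 can be sketched as follows. This is a minimal illustration, assuming Python's `sqlite3` module as a runnable stand-in for T-SQL on SQL Server; the `orders` table, its columns, and the function names are hypothetical. Both functions flag every new order at or above a threshold for review: the first fetches rows and issues one `UPDATE` per matching row (the habit a loop-minded developer brings along), while the second describes the whole set of rows in a single statement.

```python
import sqlite3


# Hypothetical sample data used by both approaches.
def make_orders(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL NOT NULL, status TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO orders (amount, status) VALUES (?, ?)",
        [(50.0, "new"), (250.0, "new"), (900.0, "new"), (120.0, "shipped")],
    )


# Loop style: fetch rows, decide in application code, one UPDATE per matching row.
def flag_large_orders_row_by_row(conn: sqlite3.Connection, threshold: float) -> int:
    changed = 0
    rows = conn.execute("SELECT id, amount FROM orders WHERE status = 'new'").fetchall()
    for order_id, amount in rows:
        if amount >= threshold:
            conn.execute("UPDATE orders SET status = 'review' WHERE id = ?", (order_id,))
            changed += 1
    return changed


# Set-based style: a single statement covers every row that matches the condition.
def flag_large_orders_set_based(conn: sqlite3.Connection, threshold: float) -> int:
    cur = conn.execute(
        "UPDATE orders SET status = 'review' WHERE status = 'new' AND amount >= ?",
        (threshold,),
    )
    return cur.rowcount


def statuses(conn: sqlite3.Connection) -> list:
    return [row[0] for row in conn.execute("SELECT status FROM orders ORDER BY id")]


if __name__ == "__main__":
    loop_db = sqlite3.connect(":memory:")
    set_db = sqlite3.connect(":memory:")
    make_orders(loop_db)
    make_orders(set_db)
    print(flag_large_orders_row_by_row(loop_db, 200.0), statuses(loop_db))
    # → 2 ['new', 'review', 'review', 'shipped']
    print(flag_large_orders_set_based(set_db, 200.0), statuses(set_db))
    # → 2 ['new', 'review', 'review', 'shipped']
```

Both versions end in the same state; the difference is that the loop version sends one extra statement per matching row, which is the kind of code a database developer would typically rewrite as a single set-based statement.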

As I mentioned earlier, separating roles may not suit a small project where resources are limited. Nor is it a silver bullet. The following are some disadvantages:

1. Developers may not understand the whole picture, as they only work in one tier instead of all tiers.

2. Bugs and performance issues that span tiers are harder to track down, because of point 1. If bug tracking is not managed properly, developers may start pointing fingers at each other.